WP Statistics Exclusions Page

On this page, you will find various settings that allow you to exclude certain types of data from being recorded and displayed in your statistics.

The settings available on the Exclusions page include:

- User Role Exclusions

- IP/Robot Exclusions

- GeoIP Exclusions

- Host Exclusions

- Site URL Exclusions

Each of these settings provides a different way to fine-tune your statistics and exclude any unwanted data. By using the options available on this page, you can ensure that your statistics accurately reflect the data that is most important to you.

To access the Exclusions page, navigate to Statistics → Settings → Exclusions.

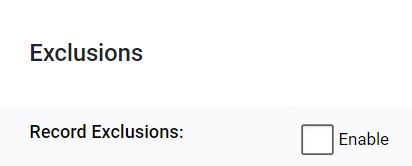

The first option on the page is Record Exclusions. When enabled, it logs every excluded hit in a separate table together with the reason for the exclusion; no other information about the hit is stored. Because an entry is written for every excluded hit to your site, not just for actual user visits, this option can generate a large amount of data.

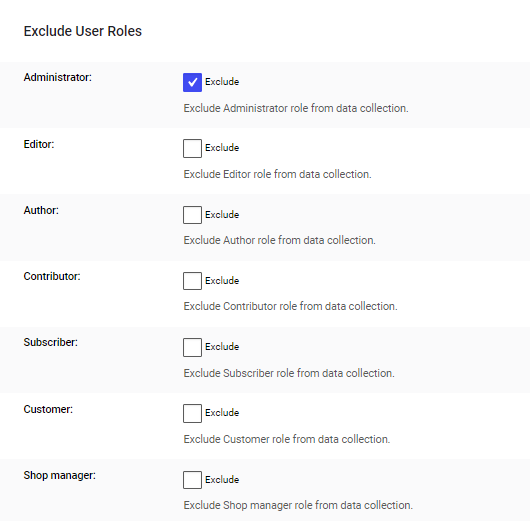

User Role Exclusions

The User Role Exclusions option in the WP Statistics plugin allows you to exclude certain user roles from data collection. This can be useful if you want to exclude certain types of users from your statistics, such as administrators or editors.

A key use case is a test or development environment where accounts with the administrator or editor role are not real visitors but still trigger data collection. Excluding those roles gives you more accurate statistics for your website.

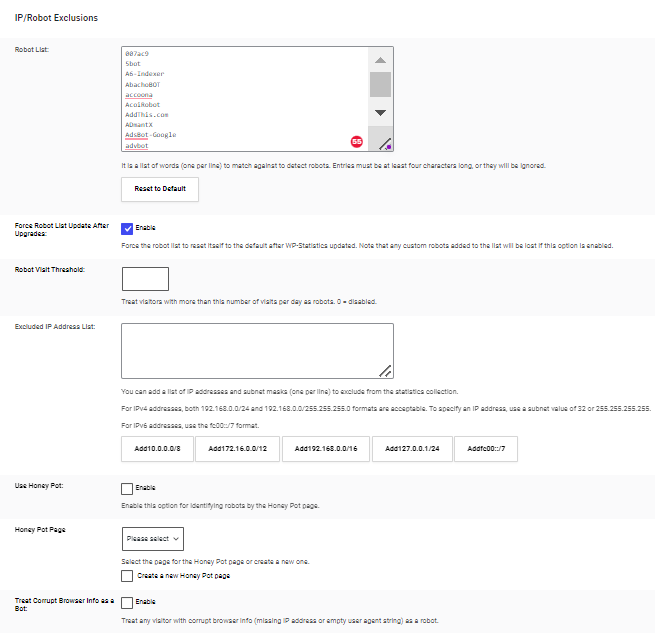

IP/Robot Exclusions

IP/Robot Exclusions let you keep hits from specific IP addresses or user agents out of your statistics. Filtering out crawlers, automated bots, and other unwanted traffic keeps your reports focused on real visitors.

Force Robot List Upgrades

This option automatically resets the robot list to its default after each update of the WP Statistics plugin, so the list stays current without manual updates. Note that any custom robots you have added to the list will be lost when this option is enabled.

Robot Visit Threshold

This setting defines how many visits per day a single visitor may make before being treated as a robot. Visitors that exceed the threshold are excluded from your statistics, which filters out noise from search engine crawlers and other automated bots that would otherwise inflate your traffic numbers. Setting the threshold to 0 disables the check.
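A minimal sketch of how a per-day visit threshold could work, written in Python purely for illustration (WP Statistics is a PHP plugin, and this is not its code). It assumes the threshold is a daily hit count above which a visitor is treated as a robot, and that 0 disables the check. The function name `robots_by_threshold` and the plain string visitor identifiers are hypothetical.

```python
from collections import Counter
from typing import Iterable, Set


def robots_by_threshold(hits_today: Iterable[str], threshold: int) -> Set[str]:
    """Return the visitors whose hit count for the day exceeds the threshold.

    hits_today holds one visitor identifier (for example an IP address) per hit.
    A threshold of 0 or less is treated as "check disabled" (an assumption).
    """
    if threshold <= 0:
        return set()
    counts = Counter(hits_today)
    return {visitor for visitor, hits in counts.items() if hits > threshold}


if __name__ == "__main__":
    hits = ["198.51.100.7"] * 5 + ["203.0.113.9"] * 2
    print(robots_by_threshold(hits, threshold=3))
```

With a threshold of 3, only the visitor with five hits is flagged; the visitor with two hits is still counted as a normal visitor.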

Excluded IP Address List

This list lets you exclude specific IP addresses from being recorded in your statistics. Use it to filter out traffic you do not want counted, such as hits from your own network.
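As an illustration of how an excluded IP list can be matched against a visitor, here is a short Python sketch using the standard `ipaddress` module. It is not the plugin's implementation. The function name `is_excluded_ip` and the assumption that entries are single addresses or subnets (such as `192.168.1.0/24`) are hypothetical.

```python
import ipaddress
from typing import Iterable


def is_excluded_ip(visitor_ip: str, excluded: Iterable[str]) -> bool:
    """Return True if visitor_ip matches any address or subnet in excluded."""
    address = ipaddress.ip_address(visitor_ip)
    for entry in excluded:
        # strict=False accepts entries with host bits set, e.g. "192.168.1.10/24".
        network = ipaddress.ip_network(entry.strip(), strict=False)
        # Only compare addresses of the same IP version (IPv4 vs IPv6).
        if address.version == network.version and address in network:
            return True
    return False


if __name__ == "__main__":
    rules = ["192.168.1.0/24", "203.0.113.5"]
    print(is_excluded_ip("192.168.1.77", rules))
    print(is_excluded_ip("10.0.0.1", rules))
```

A single address in the list is treated as a one-address network, so the same membership test covers both individual IPs and ranges.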

Use Honey Pot

This option identifies robots by using a “Honey Pot” page: a hidden page that human visitors are not expected to reach but that robots will find and request. When a visitor accesses this page, it is identified as a robot and excluded from the website’s statistics. This helps filter out traffic from search engine crawlers and other automated bots.

Treat Corrupt Browser Info as a Bot

When enabled, this option treats any visitor with a missing IP address or an empty user agent string as a robot. This is useful because some malicious bots try to hide their identity by sending corrupt browser information. Treating these visitors as robots keeps them out of your statistics.
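The check described above (missing IP address or empty user agent) can be sketched in a few lines of Python. This is an illustration only, not the plugin's code, and the function name `has_corrupt_browser_info` is hypothetical.

```python
from typing import Optional


def has_corrupt_browser_info(ip: Optional[str], user_agent: Optional[str]) -> bool:
    """Return True when the IP address or the user agent string is missing or blank."""
    ip_missing = ip is None or ip.strip() == ""
    agent_missing = user_agent is None or user_agent.strip() == ""
    return ip_missing or agent_missing


if __name__ == "__main__":
    print(has_corrupt_browser_info("203.0.113.5", "Mozilla/5.0"))
    print(has_corrupt_browser_info("203.0.113.5", ""))
```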

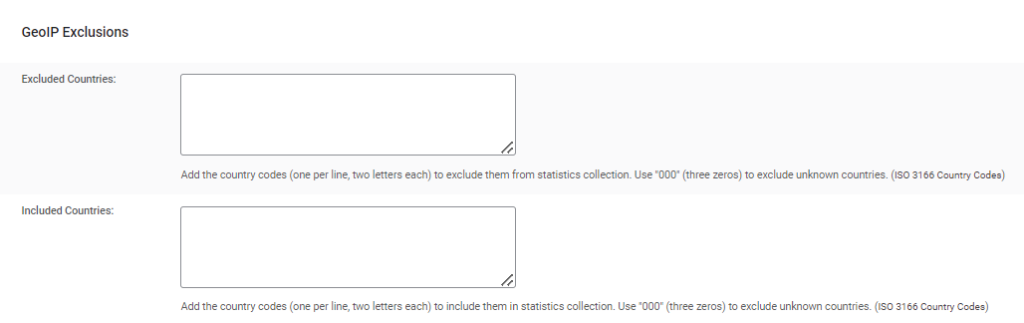

GeoIP Exclusions

GeoIP Exclusions let you exclude visitors from certain geographic locations from your statistics. You can use this to filter out unwanted traffic from certain countries or regions, or to record only visitors from specific locations so that your reports reflect the audience you are targeting. The visitor’s location is determined from their IP address.

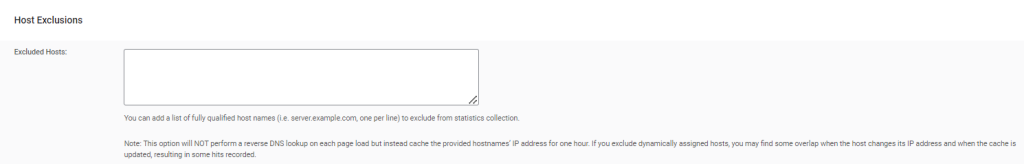

Host Exclusions

Host Exclusions let you exclude certain hostnames or domain names from your statistics. This is useful for filtering out unwanted traffic that originates from specific websites or networks.

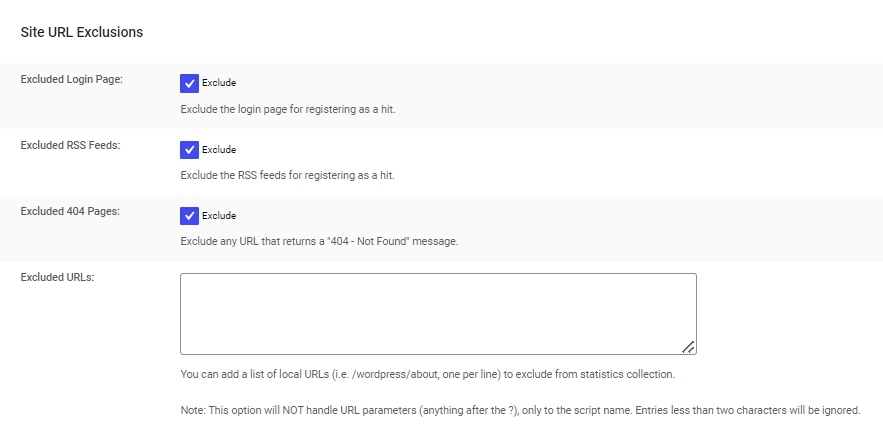

Site URL Exclusions

Site URL Exclusions let you exclude certain pages or sections of your website from your statistics. This is useful for pages whose hits do not represent meaningful visitor activity.

Website administrators can choose from three options:

- Login page: exclude the login page from being tracked, as it is mostly for internal use.

- RSS feeds: exclude the RSS feed pages from being tracked, as they are mostly for programmatic use and not for human visitors.

- 404 pages: exclude the 404 error pages from being tracked, as they are mostly caused by broken links or mistyped URLs.

Additionally, website administrators can manually enter specific URLs that they want to exclude from being tracked.
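To make the options above concrete, here is a hedged Python sketch of how such URL rules could be evaluated. It is not the plugin's implementation. The names `UrlExclusionRules` and `url_exclusion_reason` are hypothetical, as is the choice to compare only the path (ignoring the query string and trailing slashes).

```python
from dataclasses import dataclass, field
from typing import List, Optional
from urllib.parse import urlsplit


@dataclass
class UrlExclusionRules:
    """Hypothetical container mirroring the three toggles plus the manual URL list."""
    exclude_login: bool = True
    exclude_feeds: bool = True
    exclude_404: bool = True
    excluded_paths: List[str] = field(default_factory=list)


def url_exclusion_reason(url: str, rules: UrlExclusionRules, *,
                         is_login: bool = False, is_feed: bool = False,
                         is_404: bool = False) -> Optional[str]:
    """Return the reason a hit is excluded, or None if it should be recorded."""
    if rules.exclude_login and is_login:
        return "login page"
    if rules.exclude_feeds and is_feed:
        return "RSS feed"
    if rules.exclude_404 and is_404:
        return "404 page"
    # Compare paths only: drop the query string and any trailing slash.
    path = urlsplit(url).path.rstrip("/") or "/"
    for excluded in rules.excluded_paths:
        if path == (excluded.rstrip("/") or "/"):
            return "excluded URL"
    return None


if __name__ == "__main__":
    rules = UrlExclusionRules(excluded_paths=["/wordpress/about"])
    print(url_exclusion_reason("https://example.com/wordpress/about/?ref=1", rules))
    print(url_exclusion_reason("/blog/", rules))
```

Returning a reason string rather than a plain boolean fits the Record Exclusions option described earlier, which stores why each hit was excluded.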